Autonomous machines are reshaping our world at an unprecedented pace, demanding urgent ethical frameworks to guide their development and deployment responsibly. 🤖

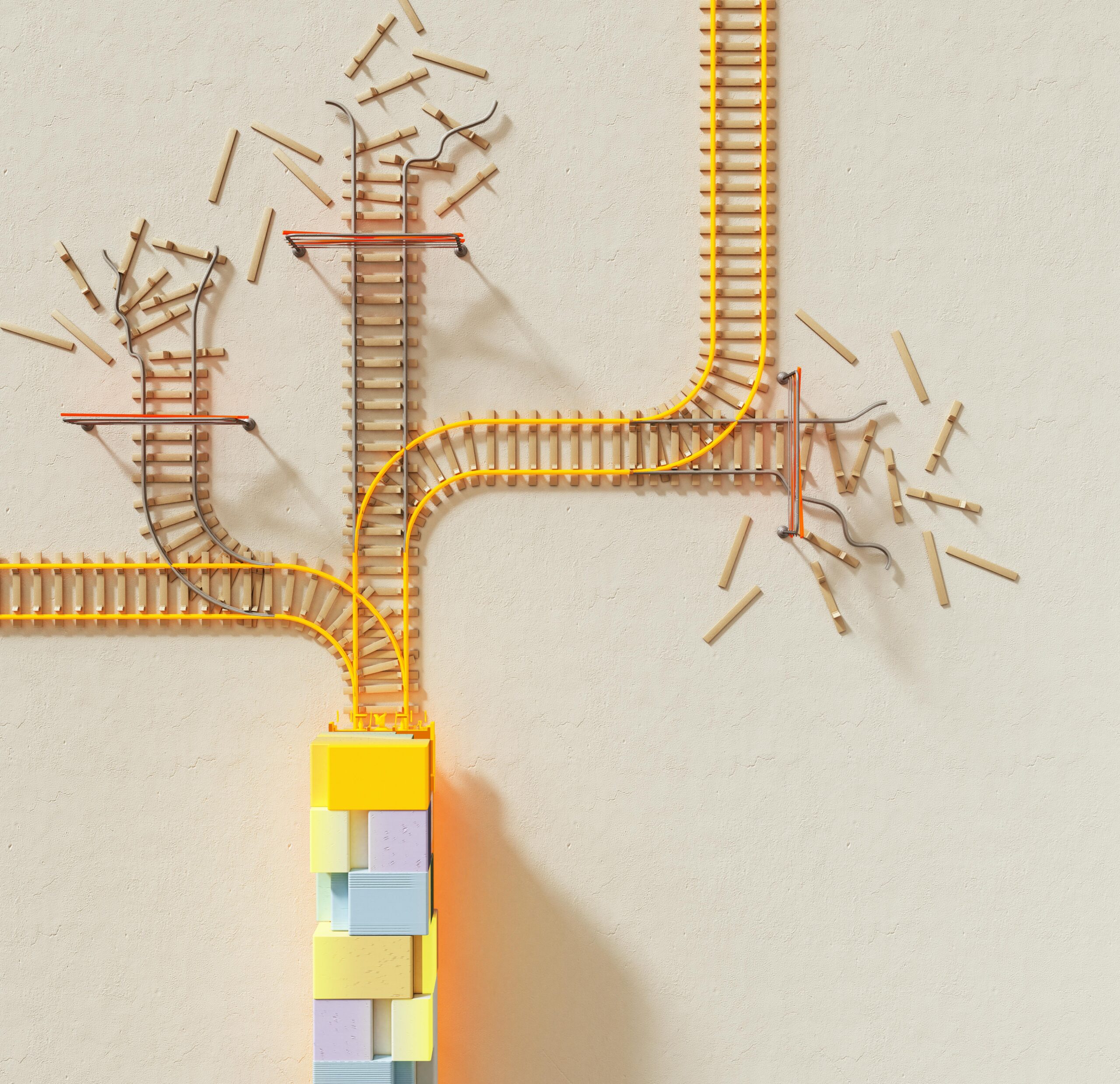

Artificial intelligence and autonomous systems have moved from science fiction into daily reality. Self-driving vehicles navigate our streets, algorithmic systems make critical decisions about healthcare and finance, and robotic technologies increasingly operate without human intervention. Yet beneath this technological marvel lies a complex web of moral questions that society must address before these innovations become irreversibly embedded in our infrastructure.

The conversation surrounding autonomous machines extends far beyond technical capabilities. It touches the very core of human values, challenging us to define what ethical behavior means when machines make decisions that affect human lives. As we stand at this critical juncture, understanding the moral imperative behind autonomous technology becomes not just an academic exercise, but a societal necessity.

The Foundation of Machine Ethics: Why Morality Matters in Automation

When we delegate decision-making authority to autonomous systems, we implicitly transfer aspects of our moral agency to these machines. This transfer raises fundamental questions about responsibility, accountability, and the nature of ethical reasoning itself. Unlike human decision-makers who draw upon emotional intelligence, cultural context, and lived experience, autonomous machines operate through algorithms and data patterns.

The importance of embedding ethical considerations into autonomous systems becomes apparent when examining their potential impact. These machines increasingly operate in domains where their decisions carry significant consequences—from medical diagnostics that determine treatment paths to financial algorithms that decide loan approvals. Each decision point represents an opportunity for either upholding or violating fundamental ethical principles.

Consider the autonomous vehicle faced with an unavoidable accident scenario. Should it prioritize passenger safety above all else, or should it calculate outcomes that minimize total harm, potentially sacrificing its occupants? This modern interpretation of the classic trolley problem illustrates how autonomous machines force us to make explicit the moral calculations that humans might make instinctively or subconsciously.

Programming Morality: The Technical Challenge of Ethical Implementation

Translating human ethical frameworks into machine-readable code presents unprecedented technical and philosophical challenges. Moral philosophy offers multiple competing frameworks, including utilitarianism, deontological ethics, and virtue ethics, each with different implications for how autonomous systems should behave. Programmers and ethicists must translate these nuanced philosophical positions into explicit rules, constraints, and objective functions that software can evaluate.
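To make the contrast between frameworks concrete, here is a minimal Python sketch of how a utilitarian rule and a deontological rule might choose differently between the same options. Everything in it is an illustrative assumption: the `Action` type, the `expected_harm` score, and the `violates_rule` flag are hypothetical, and real systems would need far richer models of harm and duty.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    expected_harm: float  # hypothetical harm score; lower is better
    violates_rule: bool   # True if the action breaks a hard constraint


def utilitarian_choice(actions):
    """Pick the action with the lowest expected harm, ignoring rules."""
    return min(actions, key=lambda a: a.expected_harm)


def deontological_choice(actions):
    """Discard rule-violating actions first, then minimise harm among the rest."""
    permitted = [a for a in actions if not a.violates_rule]
    if not permitted:
        return None  # no permissible action; a real system would escalate
    return min(permitted, key=lambda a: a.expected_harm)


# Two made-up options with made-up scores.
actions = [
    Action("swerve", expected_harm=1.0, violates_rule=True),
    Action("brake", expected_harm=2.0, violates_rule=False),
]

print(utilitarian_choice(actions).name)
print(deontological_choice(actions).name)
```

The two functions disagree on this input, which is the point: the choice of framework is a design decision that has to be made explicitly before any code is written.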

The challenge intensifies when considering cultural variations in ethical standards. What constitutes ethical behavior varies significantly across societies, religions, and cultural contexts. An autonomous system deployed globally must somehow navigate these differences without imposing one cultural framework over others—a task that proves extraordinarily complex in practice.

Accountability in the Age of Algorithms: Who Bears Responsibility? ⚖️

One of the most pressing ethical questions surrounding autonomous machines concerns accountability. When an autonomous system causes harm, determining responsibility becomes problematic. Traditional legal and ethical frameworks assume human agency, but autonomous systems blur the lines between tool and agent.

Multiple parties could potentially bear responsibility for autonomous system failures:

- The engineers who designed and programmed the system

- The companies that developed and deployed the technology

- The organizations that chose to implement autonomous solutions

- The regulatory bodies that approved their use

- The end users who activated or relied upon the systems

This distributed responsibility creates what scholars call an “accountability gap”—situations where harmful outcomes occur but no single party can be held fully responsible. This gap threatens fundamental principles of justice that require identifiable responsible parties for redress and correction.

Building Accountability Frameworks for Autonomous Systems

Addressing the accountability gap requires developing new frameworks that acknowledge the unique nature of autonomous systems while preserving meaningful accountability. Some proposed solutions include mandatory audit trails that document decision-making processes, certification requirements for autonomous systems deployed in critical domains, and insurance mechanisms that ensure compensation regardless of fault determination.

Transparency emerges as a crucial component of accountability. When autonomous systems operate as “black boxes” with inscrutable decision-making processes, holding anyone accountable becomes nearly impossible. The push for explainable AI reflects recognition that accountability requires understanding how and why systems reach particular decisions.
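As a rough illustration of what a mandatory audit trail could look like, the sketch below chains each decision record to the previous one with a SHA-256 hash, so that later edits to a stored record become detectable. The record fields (`decision`, `inputs`, `model_version`) and the function names are assumptions made for this example, not a standard; a production log would also need timestamps, access control, and secure storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record


def _digest(body):
    """Hash a record body deterministically."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_record(log, decision, inputs, model_version):
    """Append a decision record whose hash covers the previous record's hash."""
    body = {
        "decision": decision,
        "inputs": inputs,
        "model_version": model_version,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    record = dict(body, hash=_digest(body))
    log.append(record)
    return record


def verify(log):
    """Return True if every record's hash and back-link are intact."""
    prev_hash = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev_hash"] != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


log = []
append_record(log, "approve", {"income": 52000}, "v1")
append_record(log, "deny", {"income": 18000}, "v1")
print(verify(log))
```

A log like this does not explain why a system decided as it did, but it preserves what was decided, on which inputs, and by which model version, which is the raw material any later audit depends on.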

The Bias Dilemma: Ensuring Fairness in Automated Decision-Making

Many autonomous machines learn from historical data, and historical data often reflects human biases and societal inequities. When these biased datasets train autonomous systems, the resulting machines perpetuate and potentially amplify existing discrimination. This represents one of the most ethically troubling aspects of autonomous technology deployment.

Real-world examples demonstrate the severity of this problem. Facial recognition systems have shown significantly higher error rates for people with darker skin tones. Algorithmic hiring tools have demonstrated gender bias, screening out qualified candidates based on characteristics correlated with sex. Predictive policing systems have reinforced patterns of over-policing in minority communities.

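The technical challenge lies in defining fairness itself. Computer scientists have identified numerous competing mathematical definitions of fairness, such as equal selection rates across groups, equal error rates, and equally calibrated scores. Several of these cannot all hold at once except in special cases, for example when the groups have identical base rates or the predictor is perfect. No algorithm can satisfy every fairness criterion simultaneously, which forces difficult choices about which conception of fairness takes priority.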
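A small worked example can show how two fairness definitions pull apart. The Python sketch below computes a demographic parity gap (difference in positive-prediction rates) and an equal opportunity gap (difference in true-positive rates) on a made-up dataset of eight people in two hypothetical groups, "a" and "b". The data and function names are invented for illustration only.

```python
def rate(values):
    """Mean of a list of 0/1 values, or 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def demographic_parity_gap(preds, groups):
    """Positive-prediction rate of group 'a' minus that of group 'b'."""
    a = [p for p, g in zip(preds, groups) if g == "a"]
    b = [p for p, g in zip(preds, groups) if g == "b"]
    return rate(a) - rate(b)


def equal_opportunity_gap(preds, labels, groups):
    """True-positive rate of group 'a' minus that of group 'b'."""
    a = [p for p, y, g in zip(preds, labels, groups) if g == "a" and y == 1]
    b = [p for p, y, g in zip(preds, labels, groups) if g == "b" and y == 1]
    return rate(a) - rate(b)


# Toy data: the groups have different base rates (0.75 vs 0.25).
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
preds = [1, 1, 0, 0, 1, 1, 0, 0]

dp_gap = demographic_parity_gap(preds, groups)
eo_gap = equal_opportunity_gap(preds, labels, groups)
print(dp_gap, eo_gap)
```

Here both groups receive positive predictions at the same rate, so the demographic parity gap is zero, yet qualified members of group "a" are approved less often than qualified members of group "b". Satisfying one criterion does not guarantee the other.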

Strategies for Mitigating Algorithmic Bias

Addressing bias in autonomous systems requires multi-faceted approaches combining technical interventions, diverse development teams, and ongoing monitoring. Organizations must carefully curate training data to identify and correct for historical biases, though this process itself raises questions about appropriate intervention levels.

Diverse development teams bring multiple perspectives that help identify potential biases that homogeneous groups might overlook. Including ethicists, social scientists, and representatives from affected communities in the development process helps surface ethical concerns before deployment.

Continuous monitoring after deployment remains essential, as systems may develop unexpected biases when encountering real-world data that differs from training datasets. Regular audits and bias assessments should become standard practice for any organization deploying autonomous decision-making systems.
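One possible shape for such monitoring is a sliding-window check on how often each group receives a favourable decision. The sketch below is an assumption-laden illustration: the class name, the window size, and the 0.8 alert threshold (borrowed from the "four-fifths" rule of thumb used in US employment-selection guidance) are choices made for the example, not requirements drawn from this article.

```python
from collections import defaultdict, deque


class SelectionRateMonitor:
    """Track recent positive-decision rates per group over a sliding window."""

    def __init__(self, window=100, min_ratio=0.8):
        self.min_ratio = min_ratio  # assumed alert threshold
        self.recent = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, decision):
        """Store one decision (truthy means favourable) for a group."""
        self.recent[group].append(1 if decision else 0)

    def ratio(self):
        """Lowest group rate divided by the highest, or None if undefined."""
        rates = [sum(d) / len(d) for d in self.recent.values() if d]
        if len(rates) < 2 or max(rates) == 0:
            return None
        return min(rates) / max(rates)

    def needs_review(self):
        """True when the rate ratio has fallen below the threshold."""
        r = self.ratio()
        return r is not None and r < self.min_ratio


monitor = SelectionRateMonitor(window=4)
for decision in (1, 1, 1, 0):
    monitor.record("a", decision)
for decision in (1, 0, 0, 0):
    monitor.record("b", decision)

print(monitor.ratio(), monitor.needs_review())
```

A flag from a check like this is a prompt for human investigation rather than proof of discrimination, since differences in rates can have several causes.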

Privacy and Autonomy: Balancing Innovation with Individual Rights 🔒

Autonomous machines frequently require vast amounts of data to function effectively. Self-driving cars continuously collect information about their surroundings, smart home devices monitor household activities, and recommendation algorithms track user behavior patterns. This data collection raises significant privacy concerns that intersect with fundamental ethical principles about individual autonomy and dignity.

The ethical imperative extends beyond simply protecting data from breaches. It encompasses questions about informed consent, data ownership, and the right to opt out of automated systems. Many autonomous systems operate pervasively enough that individuals cannot meaningfully avoid them, raising concerns about coerced participation in surveillance ecosystems.

| Privacy Concern | Autonomous System Example | Ethical Implication |
|---|---|---|
| Continuous Surveillance | Smart city sensors | Loss of anonymity in public spaces |
| Behavioral Profiling | Recommendation algorithms | Manipulation of choices and preferences |
| Data Aggregation | IoT device networks | Unexpected inferences about individuals |
| Predictive Analytics | Credit scoring systems | Pre-emptive discrimination based on predictions |

Privacy-Preserving Autonomous Systems

Technical approaches like federated learning, differential privacy, and edge computing offer methods for building autonomous systems that minimize privacy intrusions. Federated learning and edge computing keep raw data on local devices and share only model updates or results rather than transmitting sensitive records to central servers, while differential privacy adds calibrated noise so that released statistics or models reveal little about any one individual.
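To give a feel for differential privacy, the sketch below releases a noisy count using the Laplace mechanism. A counting query changes by at most 1 when one person is added or removed, so noise drawn from a Laplace distribution with scale 1/epsilon is the textbook calibration. The function name, the sample ages, and the choice of epsilon are assumptions for illustration, and this toy version is not hardened for production use.

```python
import random


def private_count(values, predicate, epsilon, rng):
    """Release a count with Laplace noise of scale 1/epsilon (sensitivity 1)."""
    true_count = sum(1 for v in values if predicate(v))
    rate = epsilon  # an exponential with rate epsilon has mean 1/epsilon
    # The difference of two independent exponentials is Laplace-distributed.
    noise = rng.expovariate(rate) - rng.expovariate(rate)
    return true_count + noise


ages = [23, 35, 41, 52, 67]  # made-up records
rng = random.Random(0)       # seeded only to make the example repeatable
noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(noisy)
```

Smaller values of epsilon add more noise and give stronger privacy at the cost of accuracy, so choosing epsilon is itself a policy decision rather than a purely technical one.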

However, technical solutions alone prove insufficient. Ethical deployment of autonomous systems requires robust consent mechanisms, clear data governance policies, and meaningful user control over personal information. Individuals should understand what data autonomous systems collect and how that data is used, and they should have realistic options to limit data collection when desired.

The Human Element: Preserving Meaningful Human Control

As autonomous systems grow more capable, questions emerge about appropriate levels of human oversight. Complete human control defeats the purpose of automation, yet fully autonomous operation raises concerns about accountability and the preservation of human agency. Finding the right balance represents a crucial ethical challenge.

The concept of “meaningful human control” has emerged as a potential framework for navigating this tension. This principle suggests that humans should remain substantively involved in consequential decisions, even when autonomous systems provide recommendations or execute routine operations. The challenge lies in defining what constitutes “meaningful” control in various contexts.

In some domains, such as autonomous weapons systems, many ethicists argue that meaningful human control must include human decision-making authority over life-and-death decisions. In other contexts, like automated content moderation, the appropriate level of human involvement remains contested and context-dependent.
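One way to make "meaningful human control" concrete is a routing rule that decides, per recommendation, whether a human must decide, must review, or can be bypassed. The sketch below is a hypothetical policy: the `Recommendation` fields, the `high_stakes` flag, and the 0.9 confidence threshold are assumptions chosen for the example, and appropriate values would differ by domain.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    action: str
    confidence: float  # model's self-reported confidence in [0, 1]
    high_stakes: bool  # hypothetical flag set by domain policy


def route(rec, min_confidence=0.9):
    """Return who handles the recommendation under this illustrative policy."""
    if rec.high_stakes:
        return "human_decides"  # the machine only advises
    if rec.confidence < min_confidence:
        return "human_reviews"  # a human confirms or overrides
    return "auto_execute"       # routine and confident: no human step


print(route(Recommendation("remove_post", 0.97, high_stakes=False)))
print(route(Recommendation("remove_post", 0.60, high_stakes=False)))
print(route(Recommendation("engage_target", 0.99, high_stakes=True)))
```

In this sketch, high confidence never overrides the high-stakes check, which reflects the view that some decisions should stay with humans regardless of how certain the system claims to be.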

Designing for Appropriate Human-Machine Collaboration

Effective human-machine collaboration requires thoughtful interface design that keeps humans appropriately engaged without overwhelming them with constant interventions. Systems must provide relevant information at appropriate times, maintain human situational awareness, and make it easy for humans to intervene when necessary.

The risk of over-reliance on automation—sometimes called automation bias—represents another ethical concern. When humans defer too readily to machine recommendations, they may fail to catch errors or consider important contextual factors that machines overlook. Designing systems that promote appropriate trust without excessive deference remains an ongoing challenge.

Looking Forward: Building Ethical Frameworks for Tomorrow’s Technology 🌟

The rapid pace of autonomous technology development outstrips our ability to fully anticipate ethical implications. This reality demands adaptive ethical frameworks capable of evolving alongside technology. Rather than seeking comprehensive solutions to all possible ethical challenges, we must build processes for ongoing ethical reflection and adjustment.

Multi-stakeholder engagement represents a crucial component of robust ethical frameworks. Technology developers, ethicists, policymakers, affected communities, and the broader public all bring valuable perspectives. Inclusive processes that incorporate diverse voices help identify ethical concerns that might otherwise go unnoticed and build social legitimacy for resulting frameworks.

Education plays a vital role in preparing society for the ethical challenges of autonomous systems. Engineers need training in ethical reasoning and the social implications of their work. Policymakers require technical literacy to craft effective regulations. Members of the public need to understand how autonomous systems work and what rights they have with respect to these technologies.

The Role of Regulation and Governance

Government regulation provides one mechanism for enforcing ethical standards in autonomous systems, but regulation faces challenges in keeping pace with rapid technological change. Overly prescriptive regulations risk becoming obsolete quickly, while excessively flexible frameworks may lack sufficient enforcement mechanisms.

Alternative governance approaches include industry self-regulation, professional certification for AI practitioners, and multi-stakeholder governance bodies. Each approach offers advantages and limitations, and the most effective governance will likely combine multiple mechanisms tailored to specific technological domains and applications.

Embracing the Moral Imperative: A Collective Responsibility

The ethical landscape surrounding autonomous machines presents no easy answers or simple solutions. Yet the absence of simple solutions does not diminish the moral imperative to grapple seriously with these challenges. As autonomous systems become increasingly integrated into society, their ethical dimensions demand sustained attention from all stakeholders.

Technology developers bear special responsibility to consider ethical implications throughout the design and development process, not as an afterthought but as a core component of innovation. This requires moving beyond narrow metrics of technical performance to consider broader social impacts and potential harms.

Policymakers must craft frameworks that protect fundamental rights and values while enabling beneficial innovation. This demands ongoing dialogue with technical experts, ethicists, and affected communities to understand both technological possibilities and ethical implications.

Citizens and civil society organizations play crucial roles in articulating societal values and holding developers and policymakers accountable. Public discourse about autonomous technology should extend beyond technical capabilities to engage with fundamental questions about the kind of society we want to build.

The moral imperative behind autonomous machines ultimately reflects our collective responsibility to ensure that technological progress serves human flourishing. Autonomous systems offer tremendous potential benefits—increased safety, efficiency, and capabilities that enhance human welfare. Realizing this potential while avoiding serious harms requires sustained ethical engagement at all levels of society.

As we navigate this complex ethical landscape, we must remain humble about the limitations of our current understanding while determined to uphold fundamental values. The decisions we make today about autonomous systems will shape society for generations. By embracing the moral imperative to develop these technologies responsibly, we can work toward a future where autonomous machines enhance rather than diminish human dignity, autonomy, and flourishing. 🌍

The journey toward ethical autonomous systems remains ongoing, requiring continuous reflection, adaptation, and commitment. No single article, framework, or regulation will resolve all ethical challenges. Instead, we must cultivate collective wisdom and sustained engagement with these crucial questions as autonomous technology continues evolving and expanding its influence across every domain of human activity.

Toni Santos is a machine-ethics researcher and algorithmic-consciousness writer exploring how AI alignment, data bias mitigation and ethical robotics shape the future of intelligent systems. Through his investigations into sentient machine theory, algorithmic governance and responsible design, Toni examines how machines might mirror, augment and challenge human values.

Passionate about ethics, technology and human-machine collaboration, Toni focuses on how code, data and design converge to create new ecosystems of agency, trust and meaning. His work highlights the ethical architecture of intelligence, guiding readers toward the future of algorithms with purpose.

Blending AI ethics, robotics engineering and philosophy of mind, Toni writes about the interface of machine and value, helping readers understand how systems behave, learn and reflect.

His work is a tribute to:

- The responsibility inherent in machine intelligence and algorithmic design

- The evolution of robotics, AI and conscious systems under value-based alignment

- The vision of intelligent systems that serve humanity with integrity

Whether you are a technologist, ethicist or forward-thinker, Toni Santos invites you to explore the moral-architecture of machines: one algorithm, one model, one insight at a time.